Hardware

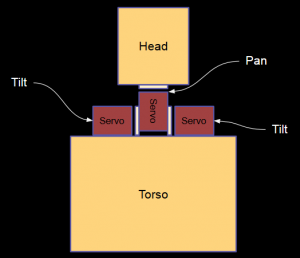

Structure

The robot's physical structure consists of a head and a torso. Pan and tilt servos control the motion of the head.

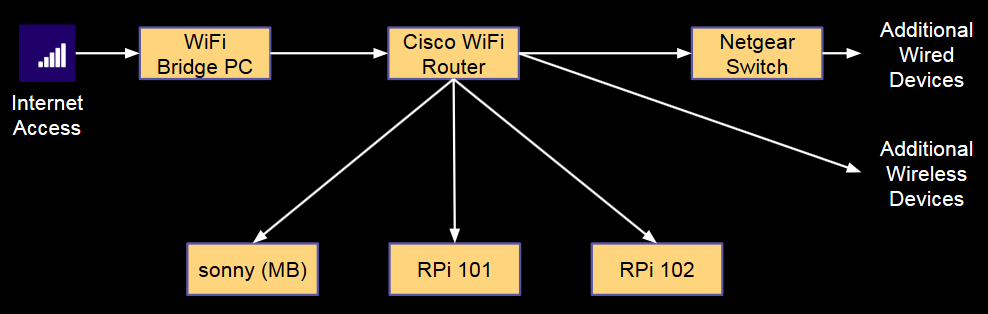

Network

An internal network connects the robot's onboard computers and allows external computers to be attached for development and monitoring.

Computers

Two Raspberry Pi computers (rpi101 and rpi102) are mounted inside the head. The main processing is performed on a separate motherboard (sonny).

Software

Robot Operating System

The software for the robot is built around the Robot Operating System (ROS) framework. ROS provides decoupled, asynchronous, TCP/IP-based communication between software nodes running on multiple computers. Both standard and custom message types are used to communicate everything from video streams to detected and recognized faces to text strings. Named message topics allow multiple publishers and subscribers to connect dynamically and share messages. In the diagrams in the following sections, which show the nodes and topics that connect the components of the system, nodes are drawn as ovals and topics as rectangles.
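The named-topic model can be illustrated without ROS itself. The following is a minimal in-process sketch and not ROS code: `TopicBus` and its methods are hypothetical names chosen for illustration, and a real ROS system delivers messages over the network between separate nodes rather than through direct function calls.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class TopicBus:
    """Toy, in-process stand-in for named topics (this is NOT the ROS API)."""

    def __init__(self) -> None:
        # topic name -> callbacks registered for that topic
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        """Register a callback; several subscribers may share one topic."""
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        """Deliver a message to every current subscriber of the topic."""
        for callback in list(self._subscribers.get(topic, [])):
            callback(message)


# Two independent subscribers attach to the same named topic.
bus = TopicBus()
heard: List[str] = []
bus.subscribe("/say", heard.append)
bus.subscribe("/say", lambda text: heard.append(text.upper()))
bus.publish("/say", "hello")
```

The publisher only knows the topic name, not who is listening, which is the decoupling the paragraph above describes.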

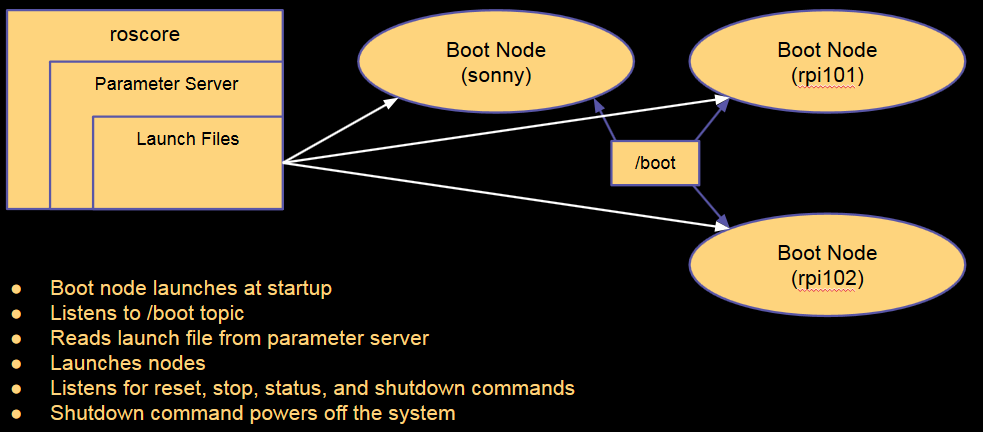

Startup and Shutdown

The boot node handles the startup and shutdown of the robot. It is a special node that is configured to start automatically when the computer boots. A copy of the boot node runs on each computer and controls all the nodes that run on that computer. One computer on the robot (sonny, in the case of KEN) is the master and runs the roscore program. It also loads a set of launch files into the ROS parameter server when it boots. The boot node on each computer connects to the parameter server, downloads its own launch file, and runs it to start the nodes. Launch files are matched to computers by the MAC address of each computer's network card.
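The MAC-based matching can be sketched as a simple lookup. This is a sketch under stated assumptions, not the boot node's actual code: the dictionary stands in for the launch files held in the parameter server, and the MAC addresses, file names, and function names are all hypothetical.

```python
import uuid
from typing import Dict, Optional


def local_mac_address() -> str:
    """Return this machine's MAC as 'aa:bb:cc:dd:ee:ff' (best effort).

    uuid.getnode() may return a random value if no hardware address is found.
    """
    node = uuid.getnode()  # 48-bit integer
    return ":".join(f"{(node >> shift) & 0xFF:02x}" for shift in range(40, -8, -8))


def normalize_mac(mac: str) -> str:
    """Lower-case the address and use ':' separators so keys compare equal."""
    return mac.strip().lower().replace("-", ":")


def select_launch_file(launch_files: Dict[str, str], mac: str) -> Optional[str]:
    """Pick the launch file registered for a MAC address, or None if unknown."""
    table = {normalize_mac(key): value for key, value in launch_files.items()}
    return table.get(normalize_mac(mac))


# Hypothetical table; real addresses and file names are not given in this document.
LAUNCH_FILES = {
    "B8:27:EB:00:00:01": "rpi101.launch",
    "B8:27:EB:00:00:02": "rpi102.launch",
    "00:1A:2B:00:00:03": "sonny.launch",
}

chosen = select_launch_file(LAUNCH_FILES, "b8-27-eb-00-00-02")
```

On a real computer the lookup key would come from `local_mac_address()`; a `None` result means no launch file is registered for that machine.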

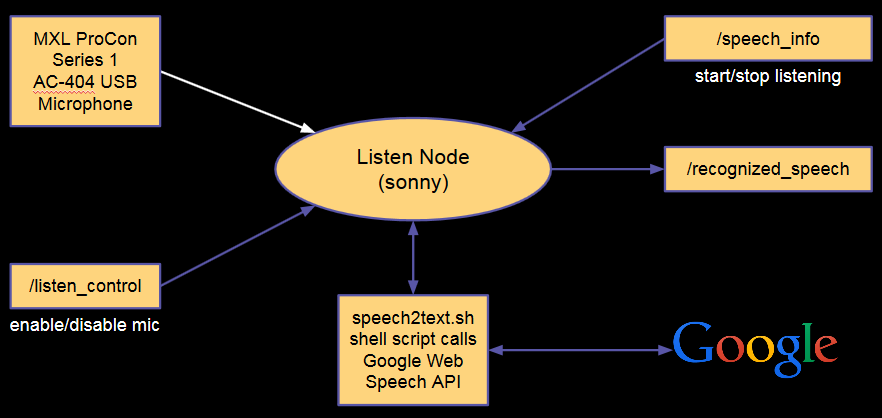

Listening

The robot listens through a microphone. The listen node segments the recorded audio into silence and voice segments and transmits the voice segments to a speech-to-text service. The recognized speech is published as a text string to be consumed by the artificial intelligence engine. Additional topics allow listening to be disabled manually, and automatically while the robot is speaking, to avoid feedback from the robot’s own voice.
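The document does not say how the listen node separates voice from silence. One common approach is a per-frame energy threshold, sketched below. The frame size, threshold, and `hangover` parameter (the number of quiet frames tolerated inside a segment) are illustrative assumptions, not values from the robot.

```python
from typing import List, Sequence, Tuple


def frame_energy(frame: Sequence[int]) -> float:
    """Mean squared amplitude of one frame of audio samples."""
    return sum(s * s for s in frame) / len(frame) if frame else 0.0


def segment_voice(
    samples: Sequence[int],
    frame_size: int = 4,
    threshold: float = 100.0,
    hangover: int = 1,
) -> List[Tuple[int, int]]:
    """Return (start, end) sample ranges whose frames exceed the energy threshold.

    A segment is closed only after more than `hangover` consecutive quiet frames,
    so short pauses do not split an utterance.
    """
    segments: List[Tuple[int, int]] = []
    start = None  # start index of the segment being built, if any
    end = 0       # end index (exclusive) of the last loud frame
    quiet = 0     # consecutive quiet frames seen inside the current segment
    for i in range(0, len(samples), frame_size):
        frame = samples[i:i + frame_size]
        if frame_energy(frame) >= threshold:
            if start is None:
                start = i
            quiet = 0
            end = i + len(frame)
        elif start is not None:
            quiet += 1
            if quiet > hangover:
                segments.append((start, end))
                start = None
    if start is not None:
        segments.append((start, end))
    return segments


# Synthetic signal: silence, a loud burst, a long pause, a short burst, silence.
signal = [0] * 8 + [50] * 8 + [0] * 12 + [40] * 4 + [0] * 4
voice_segments = segment_voice(signal)
```

Each returned range would correspond to one chunk of audio sent to the speech-to-text service.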

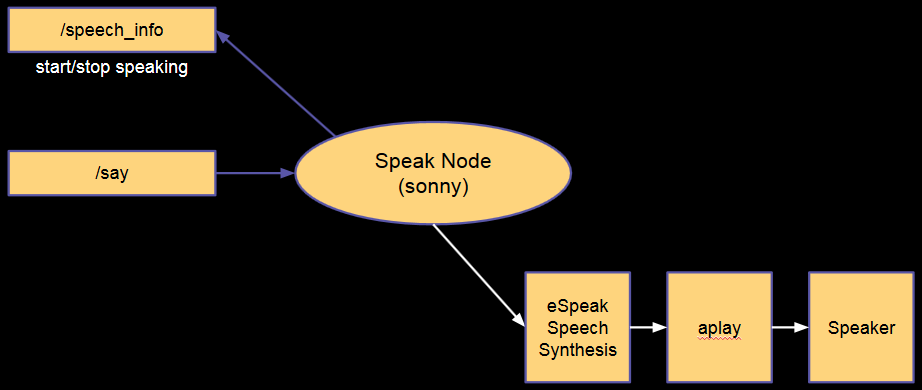

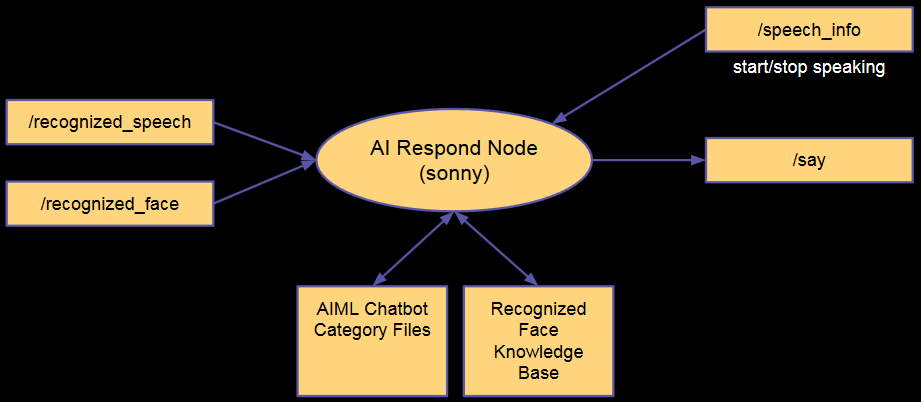

Speaking

The robot’s voice is produced by the espeak program. The artificial intelligence engine publishes the text to be spoken to the /say topic. The speak node also publishes control messages when it starts and stops speaking, so that other parts of the system, such as listening, can pause while the robot is speaking.
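The start and stop control messages can be sketched as a wrapper around the speech call. This is an illustration only: the status strings and function names are hypothetical, `publish_status` stands in for publishing on a ROS topic, and `run_espeak` assumes the `espeak` command-line program is installed and is passed the text as an argument.

```python
import subprocess
from typing import Callable, List


def run_espeak(text: str) -> None:
    """Speak text with the espeak command-line program (blocks until finished)."""
    subprocess.run(["espeak", text], check=True)


def speak(
    text: str,
    publish_status: Callable[[str], None],
    synthesize: Callable[[str], None] = run_espeak,
) -> None:
    """Announce start, speak, then always announce stop, even if speech fails."""
    publish_status("speaking_started")   # hypothetical message value
    try:
        synthesize(text)
    finally:
        publish_status("speaking_stopped")  # hypothetical message value


# Usage with a fake synthesizer so the sketch runs without audio hardware.
events: List[str] = []
speak("hello", events.append, synthesize=lambda t: events.append("say:" + t))
```

The `finally` clause matters for the listening side: if the stop message were skipped after an error, a listener that pauses on the start message would never resume.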

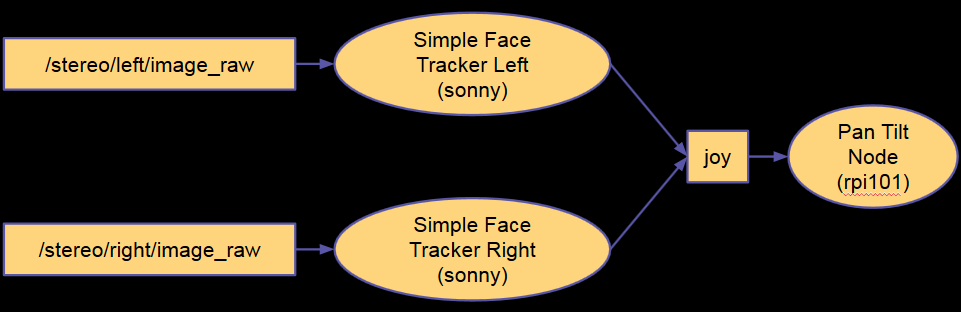

Vision – Face Tracking

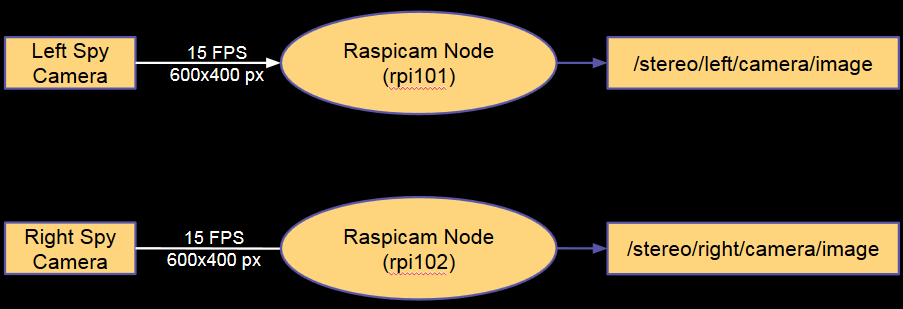

Two cameras embedded in KEN’s eyes provide video streams which are analyzed to find faces.

The video from the cameras is captured on the Raspberry Pi computers and published on separate left-eye and right-eye topics.

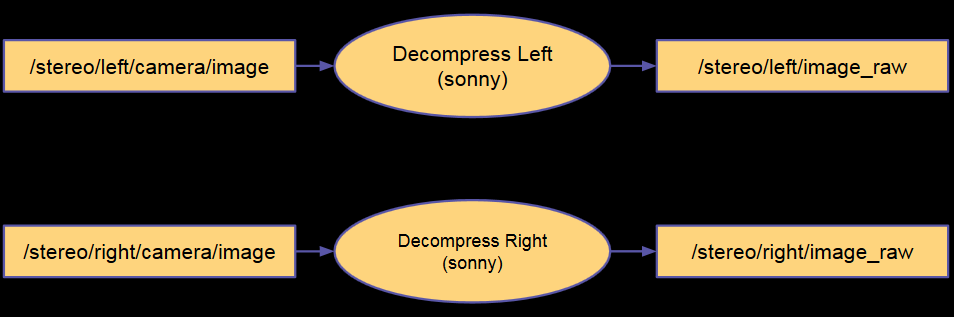

The image streams from the cameras are decompressed into raw image streams. This allows ROS nodes written in Python to access the video frames.

The simple face tracker uses OpenCV to run a Haar cascade classifier on each image frame. If a face is found, joystick direction messages are published to move the head so that the face is centered in the robot’s gaze. The position in each eye that corresponds to a direct stare from the robot is calibrated and stored in the launch file.
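The centering step can be sketched as a comparison between the face's center and the calibrated stare point. OpenCV's Haar cascade detector returns face boxes as `(x, y, w, h)`, which is the input assumed here. The dead zone, the sign convention for pan and tilt, and the function names are assumptions made for illustration.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def face_center(box: Box) -> Tuple[float, float]:
    """Center point of a face bounding box."""
    x, y, w, h = box
    return (x + w / 2.0, y + h / 2.0)


def joystick_direction(
    box: Box,
    stare_point: Tuple[float, float],
    dead_zone: float = 10.0,
) -> Tuple[int, int]:
    """Return (pan, tilt), each -1, 0, or 1, to move the face toward the stare point.

    Assumed convention: +1 pan means the face is to the right of the stare point
    in the image, +1 tilt means it is below it. Offsets within the dead zone
    produce 0 so the head does not jitter around the target.
    """
    cx, cy = face_center(box)
    dx = cx - stare_point[0]
    dy = cy - stare_point[1]
    pan = 0 if abs(dx) <= dead_zone else (1 if dx > 0 else -1)
    tilt = 0 if abs(dy) <= dead_zone else (1 if dy > 0 else -1)
    return pan, tilt


# Hypothetical calibrated stare point for one eye.
STARE_POINT = (160.0, 120.0)
direction = joystick_direction((200, 100, 40, 40), STARE_POINT)
```

In the robot, the stare point for each eye would be read from the launch file rather than hard-coded.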

Vision – Face Recognition

Face recognition begins by detecting faces in the incoming video streams from the robot’s eyes. Each detected face is identified by its camera, its image frame, and the coordinates of the face and eyes.

The detected face is cropped and normalized to a 30×30 grayscale image.
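A minimal sketch of the crop and resize step, written in plain Python on a grayscale image stored as a list of rows. It assumes that normalization means only resizing to 30×30 with nearest-neighbor sampling; the robot's actual code may also adjust contrast or alignment, which the document does not specify. All function names are hypothetical.

```python
from typing import List, Tuple

Image = List[List[int]]          # grayscale rows, values 0..255
Box = Tuple[int, int, int, int]  # (x, y, width, height)


def crop(image: Image, box: Box) -> Image:
    """Cut the face rectangle out of the full frame."""
    x, y, w, h = box
    return [row[x:x + w] for row in image[y:y + h]]


def resize_nearest(image: Image, size: int = 30) -> Image:
    """Resize to size x size by picking the nearest source pixel for each target pixel."""
    src_h = len(image)
    src_w = len(image[0])
    return [
        [image[r * src_h // size][c * src_w // size] for c in range(size)]
        for r in range(size)
    ]


def normalize_face(image: Image, box: Box, size: int = 30) -> Image:
    """Crop a detected face and scale it to a fixed-size grayscale patch."""
    return resize_nearest(crop(image, box), size)


# Synthetic 160x120 frame whose pixel value encodes its position.
frame = [[(x + y) % 256 for x in range(160)] for y in range(120)]
face = normalize_face(frame, (20, 10, 60, 60))
```

A fixed patch size means every face reaches the recognition stage with the same number of pixels, regardless of how large it appeared in the frame.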

The detected faces are processed by the recognize face node and identified as either recognized or unrecognized. Depending on this determination, the detected face message is forwarded to an appropriate topic.

The learn face node processes unrecognized faces. It stores information about the faces in files in a facedb folder, and once it has gathered sufficient information, it publishes a new learned face. The recognize face node listens for learned face messages and loads them into its recognition engine. This allows the robot to do unsupervised, online learning of new faces.
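The routing and online loading described above can be sketched as follows. The document does not name the recognition algorithm, so this sketch substitutes a simple nearest-template comparison with a distance threshold. The topic names, labels, threshold, and class name are all hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Face = List[int]  # flattened grayscale pixels, e.g. 30 * 30 = 900 values

RECOGNIZED_TOPIC = "/recognized_face"      # hypothetical topic name
UNRECOGNIZED_TOPIC = "/unrecognized_face"  # hypothetical topic name


def mean_abs_difference(a: Face, b: Face) -> float:
    """Average per-pixel absolute difference between two equally sized faces."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


class FaceRecognizer:
    """Stand-in recognizer: nearest stored template within a distance threshold."""

    def __init__(self, max_distance: float = 20.0) -> None:
        self.max_distance = max_distance
        self.known: Dict[str, Face] = {}

    def load_learned_face(self, label: str, template: Face) -> None:
        """Called when a learned-face message arrives; takes effect immediately."""
        self.known[label] = list(template)

    def route(self, face: Face) -> Tuple[str, Optional[str]]:
        """Return the topic to forward the face to, plus the label if recognized."""
        best_label: Optional[str] = None
        best_distance = float("inf")
        for label, template in self.known.items():
            distance = mean_abs_difference(face, template)
            if distance < best_distance:
                best_label, best_distance = label, distance
        if best_label is not None and best_distance <= self.max_distance:
            return RECOGNIZED_TOPIC, best_label
        return UNRECOGNIZED_TOPIC, None


recognizer = FaceRecognizer()
sample = [100] * 900
before = recognizer.route(sample)                       # nothing learned yet
recognizer.load_learned_face("person_1", [105] * 900)   # learned-face message
after = recognizer.route(sample)
```

The same face is routed to the unrecognized topic before the learned-face message arrives and to the recognized topic afterwards, without restarting the recognizer.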

Artificial Intelligence

The artificial intelligence engine links the listening, speaking, and seeing capabilities of the robot into a functional whole. Input is analyzed and mapped to a response by Artificial Intelligence Markup Language (AIML) rules. A knowledge base of names and faces allows the robot to recognize and greet a person who has been seen before.
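AIML rules pair an input pattern, which may contain a `*` wildcard, with a response template. The sketch below imitates that idea in Python; it is not an AIML interpreter. The rules and the `{star}` placeholder are hypothetical stand-ins for the robot's actual rule set, which this document does not list.

```python
import re
from typing import List, Optional, Tuple

# (pattern, template) pairs in the spirit of AIML categories; contents are hypothetical.
RULES: List[Tuple[str, str]] = [
    ("MY NAME IS *", "Nice to meet you, {star}."),
    ("HELLO", "Hello there."),
]


def normalize(text: str) -> str:
    """Upper-case the input, drop punctuation, and collapse whitespace."""
    cleaned = re.sub(r"[^A-Z0-9 ]", "", text.upper())
    return " ".join(cleaned.split())


def pattern_to_regex(pattern: str) -> str:
    """Turn 'MY NAME IS *' into an anchored regex with one group per wildcard."""
    parts = ["(.+)" if token == "*" else re.escape(token) for token in pattern.split()]
    return "^" + " ".join(parts) + "$"


def respond(text: str, rules: List[Tuple[str, str]] = RULES) -> Optional[str]:
    """Return the template of the first matching rule, or None if nothing matches."""
    sentence = normalize(text)
    for pattern, template in rules:
        match = re.match(pattern_to_regex(pattern), sentence)
        if match:
            star = match.group(1).title() if match.groups() else ""
            return template.format(star=star)
    return None


reply = respond("My name is Ada!")
```

In the robot, the input text would come from the listening pipeline and the reply would be published to the /say topic.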